The competition between ChatGPT and Claude has intensified as both models expand into business, education, and creative work. With enterprises using ChatGPT for large-scale automation and writers turning to Claude for refined prose, the statistics behind their adoption reveal meaningful trends. From user counts to context-window comparisons, the data helps organizations decide which model suits their strategy. Read on for the deep dive.

In the enterprise world, a global marketing team uses ChatGPT to automate customer-support workflows, while a software firm deploys Claude for high-precision code review and technical writing. These scenarios illustrate how usage statistics translate into real-world impact. Let’s explore the numbers.

Editor’s Choice

Here are seven standout statistics for 2025 that capture major differences and shifts between ChatGPT and Claude:

- The weekly active user base of ChatGPT reached approximately 800 million in mid-2025.

- ChatGPT’s monthly visits hit around 5.8 billion in September 2025.

- ChatGPT processed over 2.5 billion daily queries in 2025.

- Claude’s monthly active users exceeded 18.9 million as of March 2025, and some global datasets report over 30 million monthly users.

- A study of millions of Claude conversations found that 36% of occupations used the model for at least a quarter of their tasks.

- Comparative analysis shows Claude’s strength in creative and coding tasks, whereas ChatGPT leads in versatility and multimodal features.

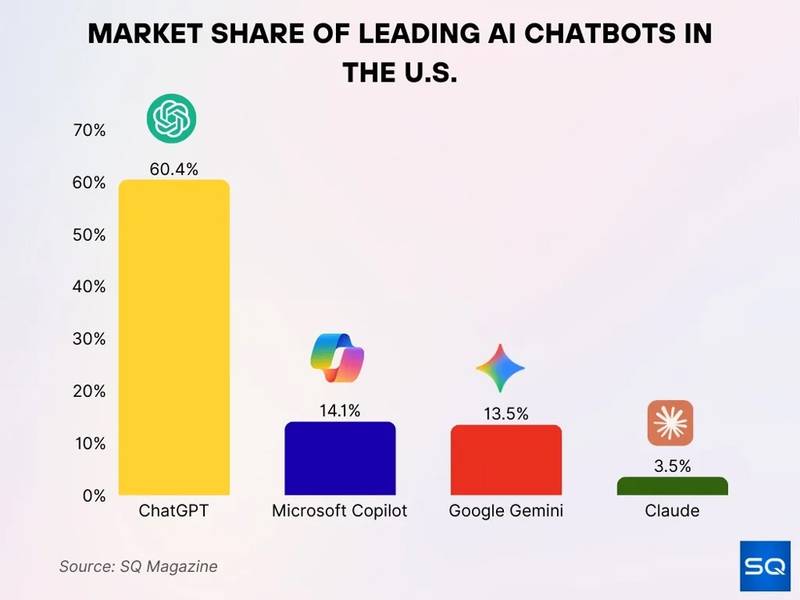

- In 2025 market-share estimates, ChatGPT held about 60.6% of the generative-AI tool segment.

Recent Developments

- In February 2025, ChatGPT’s weekly active users reached 400 million, up 33% since December 2024.

- By September 2025, ChatGPT’s weekly active users had doubled to around 800 million, seven months after the February milestone.

- OpenAI’s usage study analyzed 1.5 million anonymized ChatGPT conversations, making it one of the largest datasets on large language model usage.

- Claude’s enterprise assistant segment share grew from 18% in 2024 to 29% in 2025.

- ChatGPT holds a 65% market share for multimodal AI features in 2025 competitive analyses.

- Claude accounts for approximately 35% of AI usage in coding and long-form reasoning workflows.

- In one 2025 usage segmentation, ChatGPT handled about 70% of consumer AI tasks, while Claude accounted for about 30% of professional tasks.

- OpenAI increased ChatGPT’s token capacity (context window size) by 40% in 2025.

- Concerns about AI output reliability rose by 15% among users in surveys during 2025.

- Enterprise tool integrations with ChatGPT and Claude grew by 50% throughout 2025.
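The user-growth figures above can be cross-checked with simple arithmetic. The sketch below derives the December 2024 baseline implied by the 33% rise and the monthly rate implied by doubling in seven months; it assumes smooth compound growth between data points, which is a simplification, and the function names are illustrative.

```python
# Back-of-the-envelope checks on the ChatGPT weekly-active-user figures quoted above.
# Inputs come from the bullets; the compound-growth model is an illustrative assumption.


def implied_baseline(current: float, pct_increase: float) -> float:
    """Return the starting value implied by `current` after a `pct_increase` % rise."""
    return current / (1 + pct_increase / 100)


def monthly_growth_rate(start: float, end: float, months: int) -> float:
    """Compound monthly growth rate (as a fraction) that turns `start` into `end`."""
    return (end / start) ** (1 / months) - 1


# 400M weekly users in February 2025, "up 33% since December 2024"
dec_2024 = implied_baseline(400, 33)
# 400M -> 800M between February and September 2025 (seven months)
rate = monthly_growth_rate(400, 800, 7)

print(f"Implied December 2024 base: {dec_2024:.0f}M weekly users")
print(f"Implied compound monthly growth: {rate:.1%}")
```

Under that assumption, the figures imply roughly 300 million weekly users in December 2024 and compound growth of about 10% per month between February and September 2025.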

Model Overview

- Claude Sonnet 4 supports a context window up to 200,000 tokens (approx. 150,000 words) in 2025.

- ChatGPT’s most widely available advanced tiers support a context window of up to 128,000 tokens; enterprise accounts may be offered larger windows, but the published limit is 128K tokens.

- Claude Sonnet 4’s context window recently grew fivefold, from 200,000 to 1 million tokens, in public preview.

- ChatGPT.com registers approximately 5.24 billion monthly visits as of 2025.

- Users send roughly 2.5 billion prompts daily to ChatGPT services.

- GPT-4.1 is about 40% cheaper to run than Claude 3.7 Sonnet on comparable code-review tasks.

- Claude Sonnet 4 and 4.5 models offer context windows of 1 million tokens in beta access for select organizational tiers.

- The ChatGPT ecosystem includes a marketplace of custom GPTs and multimodal capabilities (text, image, and voice).

- Claude emphasizes refined text generation, sophisticated reasoning, and stable code output for long-form and specialized workflows.
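The word counts quoted next to token limits (for example, 200,000 tokens ≈ 150,000 words) rest on a rule of thumb of roughly 0.75 English words per token. A minimal sketch of that conversion, assuming the 0.75 ratio holds (actual ratios vary with language, formatting, and each model's tokenizer; the helper names are hypothetical):

```python
# Rough conversion between token counts and English word counts.
# The 0.75 words-per-token ratio is a common rule of thumb for English text,
# not a property of any specific tokenizer; real ratios vary by language and content.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a context window of `tokens` tokens."""
    return round(tokens * words_per_token)


def words_to_tokens(words: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many tokens a text of `words` words will consume."""
    return round(words / words_per_token)


for label, window in [("128K window", 128_000), ("200K window", 200_000), ("1M window", 1_000_000)]:
    print(f"{label}: ~{tokens_to_words(window):,} words")
```

For non-English text, and especially low-resource languages, the same passage usually consumes more tokens per word, so fewer words fit in the same window.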

Market Share and Adoption Rates

- In August 2025, ChatGPT held 60.4% of the U.S. generative AI chatbot market share, far ahead of Claude at 3.5%.

- Microsoft Copilot and Google Gemini came in at 14.1% and 13.5%, respectively.

- Claude is showing the strongest quarterly growth among emerging rivals, with a 14% increase in U.S. market share quarter-over-quarter.

- In the enterprise assistant segment, Claude’s share climbed from 18% in 2024 to 29% in 2025.
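Two checks help read these figures. The four named tools together account for 91.5% of the U.S. market, leaving 8.5% for everything else, and Claude's 14% quarter-over-quarter gain is a relative increase rather than 14 percentage points. The sketch below assumes the 3.5% figure is Claude's share after that growth:

```python
# Illustrative arithmetic on the August 2025 U.S. chatbot market-share figures above.
shares = {
    "ChatGPT": 60.4,
    "Microsoft Copilot": 14.1,
    "Google Gemini": 13.5,
    "Claude": 3.5,
}

# Share not accounted for by the four named tools (percentage points).
other = 100 - sum(shares.values())

# A "14% increase" is relative growth, so the implied prior-quarter share is 3.5 / 1.14.
claude_prev = shares["Claude"] / 1.14
claude_gain_pp = shares["Claude"] - claude_prev

print(f"All other tools: {other:.1f}%")
print(f"Claude prior quarter: {claude_prev:.2f}% (+{claude_gain_pp:.2f} percentage points)")
```

In other words, a 14% relative gain on a 3.5% base amounts to well under half a percentage point of additional share.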

Performance Benchmarks

- According to a comparison article, Claude Sonnet 4.5 scored 77.2% on the SWE-bench Verified coding benchmark, compared with 74.5% for the earlier Claude Opus 4.1.

- In the empirical “LLM4DS” paper, both ChatGPT and Claude exceeded a 50% baseline success rate for data-science code generation; neither exceeded 70%.

- That same study found ChatGPT had more consistent performance across difficulty levels, whereas Claude’s success rate varied more with complexity.

- A usage-analysis study of Claude found that in tasks mapped to the U.S. occupational taxonomy, software development and writing tasks made up nearly 50% of usage.

- The same study indicated that about 36% of occupations used Claude for at least a quarter of their task load.

- According to one review, ChatGPT’s GPT-4.5 model reduced its hallucination rate on OpenAI’s SimpleQA benchmark to 37.1% in early 2025, down from 61.8% for the prior GPT-4o model.

- A comparative feature review places ChatGPT ahead in advanced features such as image and video generation.

- In contrast, Claude is described as having a more refined “text-first” approach, which may yield better output quality in specific domains.
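Benchmark changes read differently in absolute and relative terms. A small sketch using the figures quoted in this section (the helper names are illustrative):

```python
# Absolute vs. relative change for the benchmark figures quoted above.


def absolute_change(before: float, after: float) -> float:
    """Difference in percentage points."""
    return after - before


def relative_change(before: float, after: float) -> float:
    """Change as a fraction of the starting value."""
    return (after - before) / before


# Hallucination rate: 61.8% (prior model) -> 37.1% (GPT-4.5)
print(f"Hallucination: {absolute_change(61.8, 37.1):+.1f} points, "
      f"{relative_change(61.8, 37.1):+.0%} relative")
# Coding benchmark score: 74.5% (earlier Claude model) -> 77.2% (newer Claude model)
print(f"Benchmark: {absolute_change(74.5, 77.2):+.1f} points, "
      f"{relative_change(74.5, 77.2):+.1%} relative")
```

A drop of 24.7 points from 61.8% corresponds to roughly a 40% relative reduction in hallucinations, while a 2.7-point benchmark gain is under 4% in relative terms.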

Context Window Size

- ChatGPT’s advanced tiers support up to 128,000 tokens (≈ 96,000 words) according to a 2025 comparison.

- Claude Sonnet 4/4.5 supports 200,000 tokens for standard users and up to 1 million tokens for enterprise and beta channels as of November 2025.

- Some enterprise versions of Claude claim a context window up to 1 million tokens (≈ 750,000 words) in beta or partner channels.

- By comparison, ChatGPT’s free and standard tiers remain limited (e.g., 8K or 32K tokens) for many user accounts.

- A larger context window generally translates into better handling of long documents, full-report analysis, or multi-file input without splitting.

- The gap in context window size gives Claude a distinct advantage in workflows involving long-form inputs, while ChatGPT remains stronger in more general, shorter-prompt tasks.

- Users report that hitting the context limit in ChatGPT is a common pain point when dealing with extensive source text.

- For teams focused on long-document summarization or large datasets, choosing a model with a window of 200,000 tokens or more becomes a strategic decision.

- The effective benefit of extremely large windows still depends on prompt engineering, chunking strategy, and cost per token for processing.

- Enterprises increasingly benchmark not just “how many tokens” but “how many coherent pages” the model can carry in one interaction, making the window size a key purchasing metric.
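When a document exceeds the available window, the chunking strategy mentioned above determines how it is split. A minimal sketch of fixed-size chunking with overlap, assuming the input is already tokenized (the `chunk_tokens` helper and its window and overlap values are hypothetical, not limits published for any model):

```python
# Split a long token sequence into overlapping chunks that each fit a context budget.
# Assumes the text is already tokenized; window and overlap are hypothetical parameters.
from typing import List, Sequence


def chunk_tokens(tokens: Sequence[int], window: int, overlap: int) -> List[Sequence[int]]:
    """Return consecutive slices of at most `window` tokens, each sharing
    `overlap` tokens with the previous slice so context carries across boundaries."""
    if window <= 0:
        raise ValueError("window must be positive")
    if not 0 <= overlap < window:
        raise ValueError("overlap must be in [0, window)")
    step = window - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # the last chunk already reaches the end of the input
    return chunks


# A 500,000-token document against a 200,000-token window with 10,000 tokens of overlap
doc = range(500_000)
parts = chunk_tokens(doc, window=200_000, overlap=10_000)
print(len(parts), [len(p) for p in parts])
```

Overlap spends extra tokens (and cost) to preserve continuity across chunk boundaries; with a 1-million-token window, the same 500,000-token document would fit in a single chunk.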

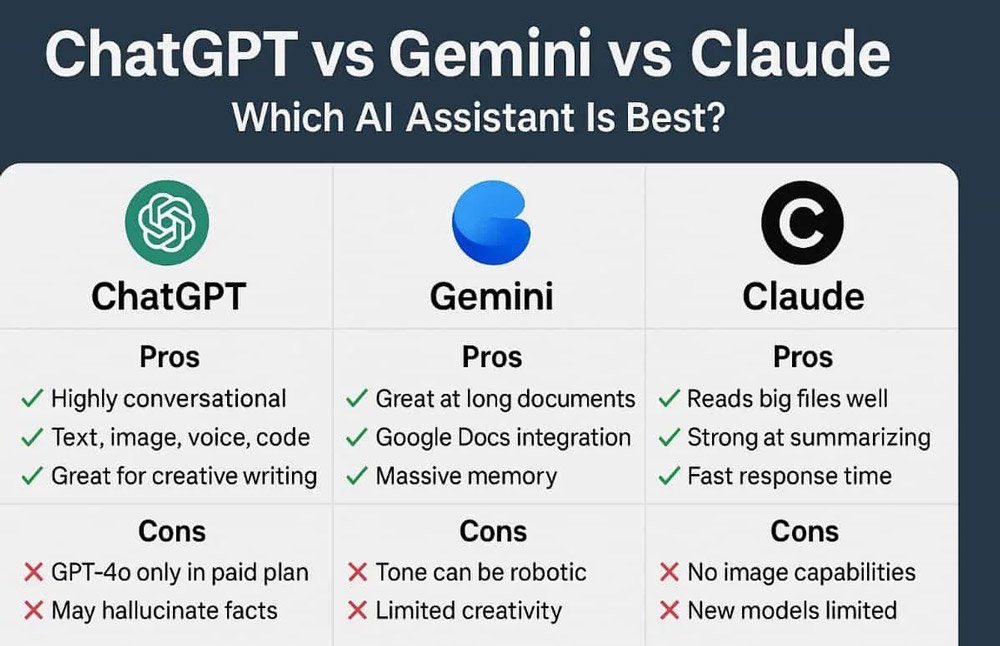

ChatGPT vs Gemini vs Claude: Key Pros and Cons

- ChatGPT is highly conversational, supports text, image, voice, and code, and excels at creative writing.

- Limitations of ChatGPT include access to GPT-4 only on the paid plan and a tendency to hallucinate facts.

- Gemini performs strongly on long documents, offers Google Docs integration, and provides a very large context window.

- Gemini’s weaknesses include a tone that can feel robotic and limited creativity.

- Claude stands out for handling big files, delivering fast response times, and being strong at summarizing.

- Drawbacks of Claude include no image generation (it can analyze images but not create them) and fewer new model releases than rivals.

Language Support

- ChatGPT officially supports 58 languages, with practical understanding of over 95 languages.

- ChatGPT supports major programming languages, including Python, JavaScript, Java, C++, Ruby, PHP, Swift, Go, TypeScript, HTML/CSS, SQL, and R.

- ChatGPT covers approximately 4.5 billion native speakers across its supported 95+ languages.

- Claude maintains above 90% reasoning accuracy in over 8 languages, including French, Russian, Chinese, Spanish, and Bengali.

- Relative to its English performance (100%), Claude scores around 80% in Hindi and as low as 46% in Yoruba.

- ChatGPT’s language accuracy and fluency drop significantly for low-resource languages with less digital presence.

- AI tools often claim support for more than 80 languages, but real proficiency varies greatly with the resources available for each language.

- Organizations targeting APAC, Latin America, and Africa must evaluate model support explicitly due to limitations in low-resource regional languages.

- Support for Indian regional languages still lags, slowing adoption in multilingual global deployments.

- Language support is a key differentiator in use cases like customer service, localization, and global content creation workflows.

Coding and Development Capabilities

- Developers report that AI-assisted coding reduces debugging time by 35% on average.

- Teams using generative AI for code suggestions experience a 50% increase in implementation speed for routine tasks.

- ChatGPT remains widely used for full-function code generation, while Claude often produces cleaner and more readable logic for complex prompts.

- In engineering workflows, more than half of daily code reviews are now partially automated with generative AI tools.

- AI-generated code snippets reduce documentation lookup time by 40%, streamlining developer productivity.

- Developers note that Claude’s larger context windows improve the accuracy of multi-file reasoning.

- ChatGPT continues to lead in troubleshooting support due to broad training coverage across languages and frameworks.

- AI coding assistants help teams reduce production-level defects by 20 to 30% through early pattern detection.

- Front-end developers frequently rely on AI tools for UI component generation, which cuts prototyping cycles nearly in half.

- Backend teams cite stronger benefits in workload automation, especially in error handling, environment setup, and integration scaffolding.

Data Analysis and Interpretation

- AI-assisted data exploration reduces initial analysis time by 45%.

- Generative AI automates up to 60% of repetitive analytics tasks like data cleaning and labeling.

- Advanced reasoning models deliver more consistent multi-step analytical breakdowns.

- ChatGPT’s multimodal capabilities interpret visual charts and tables for exploratory analysis.

- AI-driven interpretation tools reduce reporting backlogs by 30-40% in enterprises.

- AI generates first-draft KPI summaries, cutting down manual dashboard interpretation needs by over 50%.

- Large context windows enable ingestion of multi-sheet workbooks and extensive documents.

- Generative AI translates complex statistics into plain language, improving accessibility for non-technical users by 40%.

- Teams using AI report 25% fewer manual errors in early-stage data interpretation.

- Executives use AI-generated scenario models for faster decision-making in 80% of weekly planning cycles.

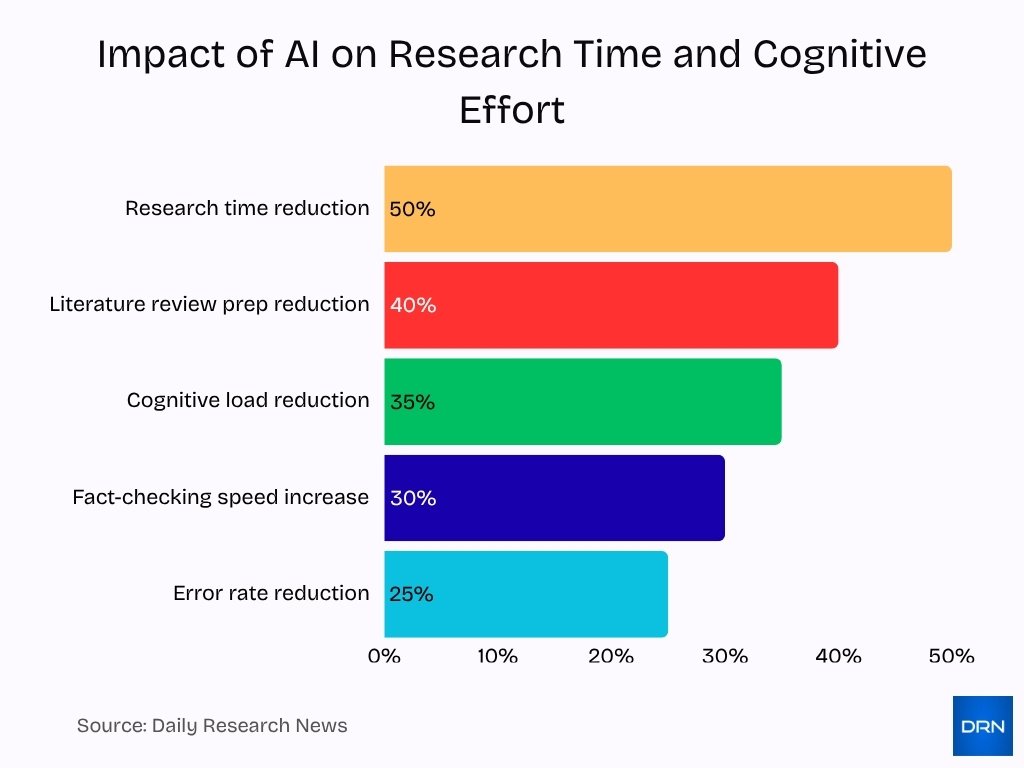

Research and Information Accuracy

- AI reduces preliminary research time by 50% for multi-source scanning.

- Structured prompts lower error rates in generated summaries by up to 25%.

- 65% of users prefer ChatGPT for broad-topic research tasks.

- Claude is favored in 40% of cases requiring detailed reasoning and longer context.

- AI-assisted fact-checking flags inconsistencies 30% faster than manual review.

- Analysts pair AI output with human checks in 70% of professional research tasks.

- Generative AI reduces cognitive load in multi-source comparisons by 35%.

- Specialized models improve domain-specific information retrieval accuracy by 45%.

- AI drafting cuts literature review prep time by 40% in academic and corporate settings.

- Experts report that 90% of policy and finance research combines AI with manual validation.

Creative Writing Strengths

- A meta-analysis of 28 studies found that humans paired with Gen AI outperform humans alone, with an effect size of g = 0.27.

- The same study found that Gen AI on its own showed g = -0.05 compared to human creative performance, meaning AI alone did not outperform humans in creative tasks.

- Research found that while AI models excel in divergent and convergent thinking tasks, they underperform in creative writing compared to humans.

- In a 2025 survey, 45% of authors reported using generative AI in their writing or marketing workflows.

- Reader studies show that 84% of readers cannot distinguish between AI- and human-written content in blind tests.

- Businesses using AI writing tools report an average 59% reduction in content-creation time.

- These same businesses report a 77% increase in content output volume after adopting AI.

- AI-optimized content led to 32% higher engagement rates and 47% higher conversion rates in marketing contexts.

- One specialised paper found that creative capabilities are culturally bound, reporting an 89-point gap between Chinese and English performance.

- Despite progress, current models still rely on learned patterns rather than genuine human-style creativity.
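The effect sizes above are reported as g, the symbol conventionally used for Hedges' g: the difference between two group means divided by their pooled standard deviation, scaled by a small-sample correction. A minimal sketch of the per-study calculation on hypothetical ratings (the data and function name are illustrative, not from the meta-analysis, which additionally pools many such values across studies):

```python
# Hedges' g: standardized mean difference with a small-sample bias correction.
# The scores below are made-up placeholder data for illustration only.
import math
import statistics
from typing import Sequence


def hedges_g(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Effect size of group_a relative to group_b (positive means group_a scored higher)."""
    n1, n2 = len(group_a), len(group_b)
    m1, m2 = statistics.mean(group_a), statistics.mean(group_b)
    v1, v2 = statistics.variance(group_a), statistics.variance(group_b)  # sample variances
    pooled_sd = math.sqrt(((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2))
    d = (m1 - m2) / pooled_sd  # Cohen's d
    correction = 1 - 3 / (4 * (n1 + n2) - 9)  # Hedges' small-sample correction factor J
    return d * correction


human_with_ai = [6.1, 5.8, 6.5, 7.0, 5.9, 6.4]  # hypothetical creativity ratings
human_alone = [5.9, 5.6, 6.2, 6.8, 5.7, 6.0]
print(f"g = {hedges_g(human_with_ai, human_alone):.2f}")
```

Read this way, g = 0.27 means the human-plus-AI groups scored about a quarter of a pooled standard deviation higher than humans alone, while g = -0.05 is close to no difference.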

Summarization and Editing Skills

- Major models such as ChatGPT and Claude are reported to handle long-form summarization and editing with greater fluency than their earlier versions.

- 71.7% of content marketers use AI for outlining, 68% for ideation, and 57.4% for drafting.

- Companies report that repetitive writing tasks are now handled by AI in about 68% of cases.

- AI-assisted workflows allow teams to test 3.7× more variations of their messaging than human-only teams.

- Average content production cost dropped by about 42% after adopting AI writing platforms.

- Readers are unable to distinguish AI vs human writing in 84% of summary samples.

- Editing time decreased significantly for organisations that used AI for editing and summarization workflows.

- Larger context windows allow models to handle multi-page documents more effectively during summarization.

- Enterprises now rely heavily on AI for tasks like rewriting, grammar checks, and style normalization.

- While model-specific benchmark scores remain limited, stronger reasoning models tend to deliver better summarization accuracy.

API and Integration Options

- 23% of organizations are scaling agentic AI systems in 2025.

- 39% of organizations are experimenting with agentic AI systems.

- Flagship AI models cost up to $15 input and $75 output per 1 million tokens.

- Mid-tier AI API models cost approximately $3 input and $15 output per 1 million tokens.

- Over 54% of enterprises have significant AI integration in core business processes.

- 87% of large enterprises implemented AI solutions as of 2025.

- More than 82% of organizations have adopted some level of an API-first approach.

- Developers prioritize latency, documentation quality, context limits, and cost per token when selecting AI APIs.

- Agentic AI platforms can reduce manual workloads by up to 60% in workflow automation.

- The enterprise AI API market was valued at over $3.3 billion in 2024 and is expected to grow rapidly through 2032.

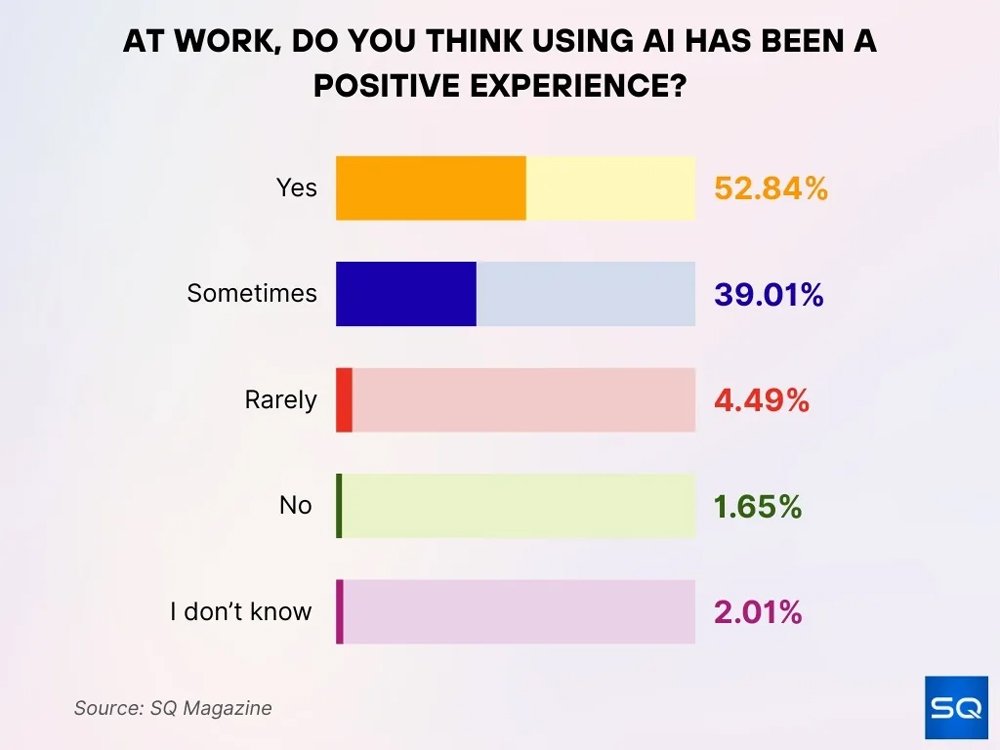

Employee Views on AI at Work

- 52.84% of employees indicate that their workplace experience with AI has been positive overall.

- 39.01% mention that AI proves helpful only at times, reflecting a mixed yet mostly positive level of adoption.

- Only 4.49% state that AI offers benefits infrequently within their daily responsibilities.

- A very small portion, just 1.65%, feels that the presence of AI in the workplace has resulted in a negative experience.

- 2.01% of respondents express uncertainty regarding how AI is influencing their job roles.

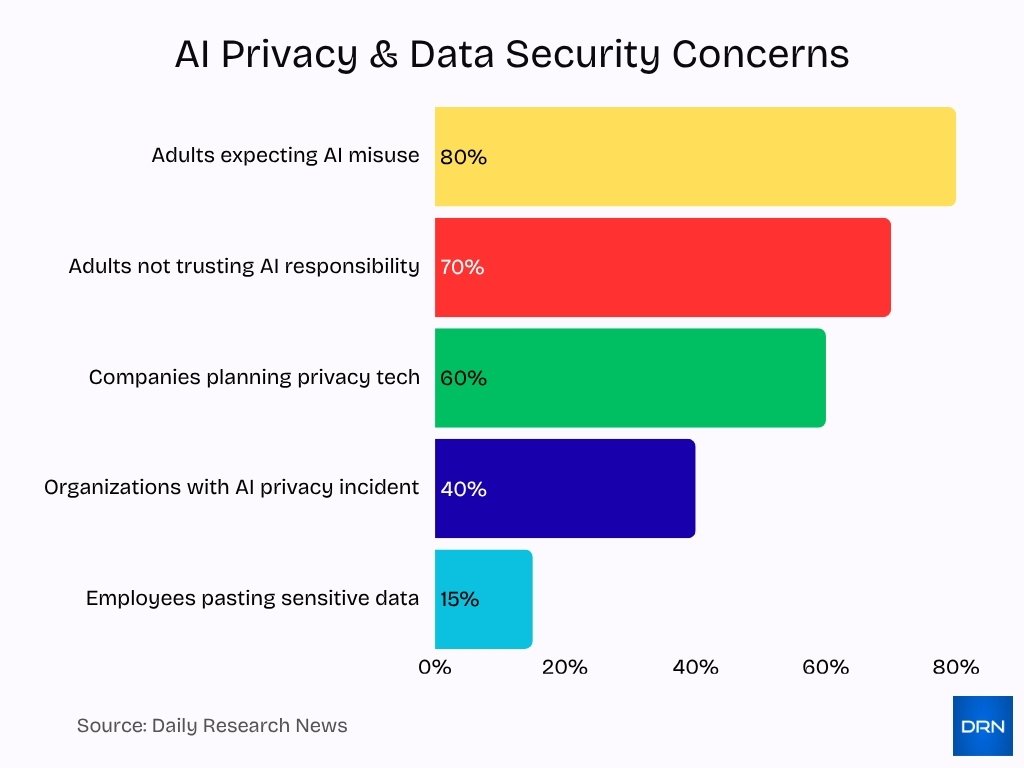

Privacy and Data Security

- Around 40% of organizations experienced an AI-related privacy incident in 2025.

- Approximately 15% of employees admitted to pasting sensitive data into public AI tools.

- Around 70% of adults do not trust companies to use AI responsibly, and more than 80% expect their data to be misused.

- Over 26 state-level initiatives in the U.S. focus on AI regulation and privacy in 2025.

- More than 60% of companies plan to deploy privacy-enhancing technologies by the end of 2025.

- Some enterprises prefer open-source LLMs because they allow in-house deployments with tighter control.

- Data security has become a major differentiator when selecting commercial AI models.

- Many organisations now demand zero-retention modes and regional data residency guarantees.

- Enterprise offerings for leading AI models emphasise compliance frameworks, audit logs, and governance tooling.

- Lack of security readiness continues to be a major barrier to broad AI deployment.

Pricing Comparison

- Premium Claude Opus models cost $15 input / $75 output per 1M tokens.

- Mid-tier AI models cost roughly $3 input / $15 output per 1M tokens.

- Some lighter AI models are priced as low as $0.25 input / $2 output per 1M tokens.

- Estimates of the generative AI market’s 2025 size vary by source, from $37.9 billion to more than $66.6 billion.

- ChatGPT API costs approximately $0.03 per 1,000 input tokens and $0.06 per 1,000 output tokens (equivalent to $30 and $60 per 1M tokens), rates that match the original GPT-4 model.

- Enterprises consider the Levelized Cost of Artificial Intelligence (LCOAI) to benchmark cost efficiency.

- Some AI vendors offer competitive pricing like $1 input / $2 output per 1M tokens.

- Higher-priced models may cost up to $75 output per 1M tokens but offer enhanced accuracy.

- AI pricing is rapidly changing with new plans and enterprise bundles frequently introduced.
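To compare the per-token prices listed above on a concrete workload, multiply each token count by its rate and divide by one million. A minimal sketch using the example tiers from this section (the workload and helper names are hypothetical, and vendor prices change frequently):

```python
# Estimate the cost of one API call from per-1M-token prices.
# Prices are the example tiers quoted above; actual vendor pricing changes often.
PRICING_PER_1M = {
    "flagship": (15.00, 75.00),  # (input USD, output USD) per 1M tokens
    "mid-tier": (3.00, 15.00),
    "light": (0.25, 2.00),
}


def per_1k_to_per_1m(price_per_1k: float) -> float:
    """Convert a per-1,000-token price to a per-1,000,000-token price."""
    return price_per_1k * 1_000


def request_cost(input_tokens: int, output_tokens: int,
                 input_price: float, output_price: float) -> float:
    """Cost in USD for one request, given prices per 1M tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# A hypothetical request: summarizing a 100,000-token report into a 2,000-token summary.
for tier, (inp, out) in PRICING_PER_1M.items():
    print(f"{tier:>9}: ${request_cost(100_000, 2_000, inp, out):.4f}")

# The per-1K ChatGPT API rates quoted above, expressed per 1M tokens.
print(per_1k_to_per_1m(0.03), per_1k_to_per_1m(0.06))
```

For this summarization example, the flagship tier costs five times the mid-tier rate ($1.65 vs. $0.33 per request). Because output tokens cost five to eight times as much as input tokens in these tiers, output-heavy workloads such as long-form drafting cost disproportionately more than input-heavy ones such as summarization.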

Frequently Asked Questions (FAQs)

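How many weekly active users does ChatGPT have?

ChatGPT reports about 800 million weekly active users as of September 2025.

What is Claude’s share of the enterprise AI assistant segment?

Claude holds around 29% market share in the enterprise AI assistant segment in 2025.

How many queries does ChatGPT process per day?

ChatGPT processes over 2.5 billion daily queries worldwide in 2025.

How widely is Claude used across occupations?

Approximately 36% of occupations used Claude for at least 25% of their tasks.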

Conclusion

The differences between models like ChatGPT and Claude are becoming less about raw novelty and more about fit for purpose. From creative writing and summarisation to integration and security, organisations must align their choice of model with their workflows, governance requirements, and costs.

While Claude offers advantages in long-form reasoning and certain enterprise features, ChatGPT continues to dominate in scale, ecosystem depth, and broad applicability. The key is not which model is universally better, but which model best matches the specific demands of your organisation. With the statistics and insights presented throughout this article, decision-makers can navigate that choice with clarity.