Misinformation on social media has evolved from a nuisance into a systemic challenge that touches journalism, public health, politics, and corporate reputation. In sectors such as public health (e.g., anti-vaccine claims) and financial services (e.g., investment scams relying on false posts), misleading information can drive real-world harm. As platforms scale globally, the impact of false and misleading content becomes harder to contain. Explore the full article to understand how the problem is mapped, measured, and addressed.

Editor’s Choice

Here are seven standout statistics that illustrate the breadth and urgency of social media misinformation:

- According to the World Economic Forum, misinformation and disinformation remain the top short-term global risk for the second consecutive year.

- A 2025 study found that 68.8% of young adults reported at least occasional exposure to fake news on social networks.

- The Pew Research Center reports that, as of August 2025, 38% of U.S. adults say they regularly get news from Facebook and 35% from YouTube.

- Two out of three Bangladeshi youth (66%) say misinformation is their biggest source of stress on social media, according to UNICEF’s 2025 survey.

- A recent study found that when posts are flagged as misleading on social media, they receive 46.1% fewer reposts, 44.1% fewer likes, 21.9% fewer replies, and 13.5% fewer views.

- A survey by the European Commission found 66% of respondents believe they’ve been exposed to disinformation at least sometimes in the past week.

- A study analysing U.S. and international news consumption found that despite rising awareness of false content, a large share of users admit they have unknowingly shared fake news.

Recent Developments

- The 2025 Global Risks Report ranked misinformation as the top immediate threat to governance and social cohesion over the next two years.

- According to the Digital News Report, trust in digital news sources dropped to 40% in 2025, continuing a longer-term decline.

- Real-time labeling of flagged posts led to a 15-30% reduction in engagement on social media platforms.

- Over 90% of health misinformation on wellness topics is spread by influencers lacking formal qualifications.

- Frequent exposure to misinformation correlates with a statistically significant increase (p < 0.005) in stress and anxiety levels.

- Scholarly output on social media misinformation includes 560 documents published from 2005 to 2025.

- New regulatory frameworks require transparency and accountability, with 30% more platforms adopting moderation policies in 2025.

- 58% of the public find discerning truth from lies online increasingly difficult, fueling misinformation anxiety.

- Within influencer-driven nutrition misinformation, carnivore-diet myths account for 29% and general health falsehoods for 24.5%.

- Fact-checking and community-note systems rank as top verification tools, used by 38% and 25% of the public, respectively.

Overview of Social Media Misinformation

- Globally, there are about 4.9 billion social media users, creating a vast audience for misleading or false content.

- Misinformation includes false, inaccurate, or misleading content shared without intent to harm, while disinformation implies deliberate intent.

- Research emphasizes that social platforms amplify false narratives more than they originate them.

- A large-scale study of young people found that 68.8% reported encountering fake news at least occasionally on social networks.

- Algorithmic structures favor engaging content, giving false or sensational posts an advantage.

- Social media’s ease of sharing means false content can travel faster than corrections or fact-checks.

- Misinformation spans politics, health, climate, finance, and more, making it a broad, multi-issue problem.

Social Media Platforms: Difficulty in Spotting Fake News

- TikTok emerges as the platform where spotting fake news feels most challenging, with 27% of participants saying it is very or somewhat difficult to determine which news is trustworthy.

- X (formerly Twitter) comes next, as 24% report that separating real from fake news is difficult, while 35% maintain a neutral stance.

- On Facebook, 21% of users report difficulty identifying credible information, while 51% consider it easy, highlighting a clear divide in perception.

- Instagram reflects a comparable pattern, with 20% indicating difficulty and 49% stating it is very or somewhat easy to judge news reliability.

- LinkedIn tends to be viewed more neutrally, with 41% saying they neither trust nor distrust what they see, and only 18% expressing difficulty.

- On WhatsApp, 17% report struggling to detect fake news, whereas 51% feel it is easy to recognize accurate content.

- YouTube is viewed more favorably: just 17% say it is difficult, and 54%, the second-highest share, rate the platform as easy for verifying information.

- Google Search stands out as the platform where judging reliability feels easiest, with only 13% experiencing difficulty and 60% saying it is easy to identify reliable details.

Frequency of Encountering Misinformation Online

- A 2025 study found that 68.8% of young adults reported encountering fake news at least occasionally.

- 66% of respondents reported exposure to disinformation at least sometimes in the past week.

- Earlier U.S. surveys found that 38.2% of respondents had unknowingly shared fake news.

- A youth poll found two in three respondents citing misinformation as their top source of stress on social media.

- Increased dependence on social media for news raises the likelihood of frequent encounters with false content.

- Research shows that repeated exposure through algorithmic loops reinforces belief in false claims.

- Influencer wellness content often misleads audiences, increasing their exposure to misinformation.

- Users with lower media literacy or digital access may encounter misinformation more frequently.

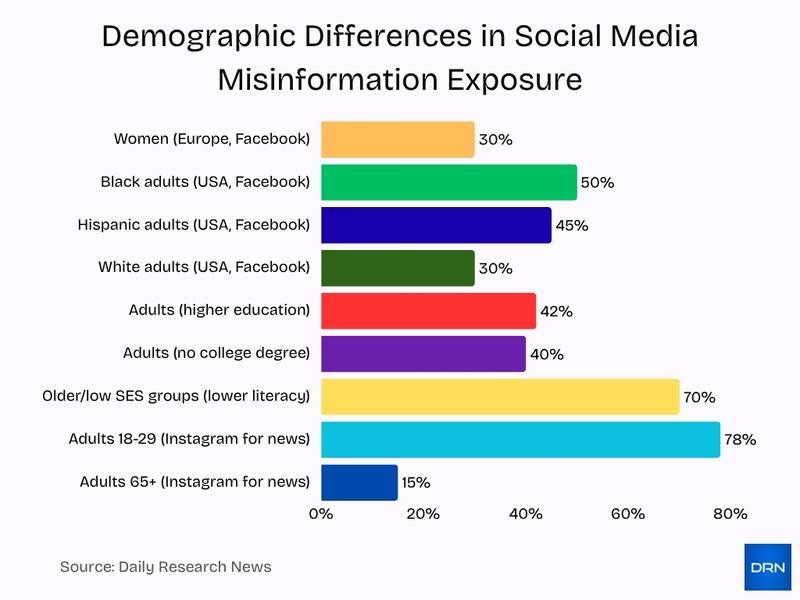

Demographic Differences in Misinformation Impact

- 48% of women get regular news from Facebook and Instagram, compared to 35% of men.

- 78% of adults aged 18-29 use Instagram for news, versus only 15% of those 65+.

- Adults with higher education still show vulnerability: 42% report difficulty discerning misinformation.

- Women aged 35–44 are the group most exposed to misinformation on Facebook in Europe, accounting for over 30% of total exposure.

- 70% of older adults and low socio-economic groups have lower media literacy, increasing misinformation risk.

- Black and Hispanic adults get news from Facebook at rates of 50% and 45%, respectively, higher than White adults at 30%.

- Adults without a college degree are 40% more likely to get news on Facebook than college graduates.

- Younger users aged 18-29 face 3 to 5 times higher misinformation exposure due to greater overall use.

- Demographic studies show dual risk where high exposure to misinformation coincides with high vulnerability, especially among women and older adults.

- Social media misinformation exposure peaks at a median age of 41, with women more frequently impacted than men in key age brackets.

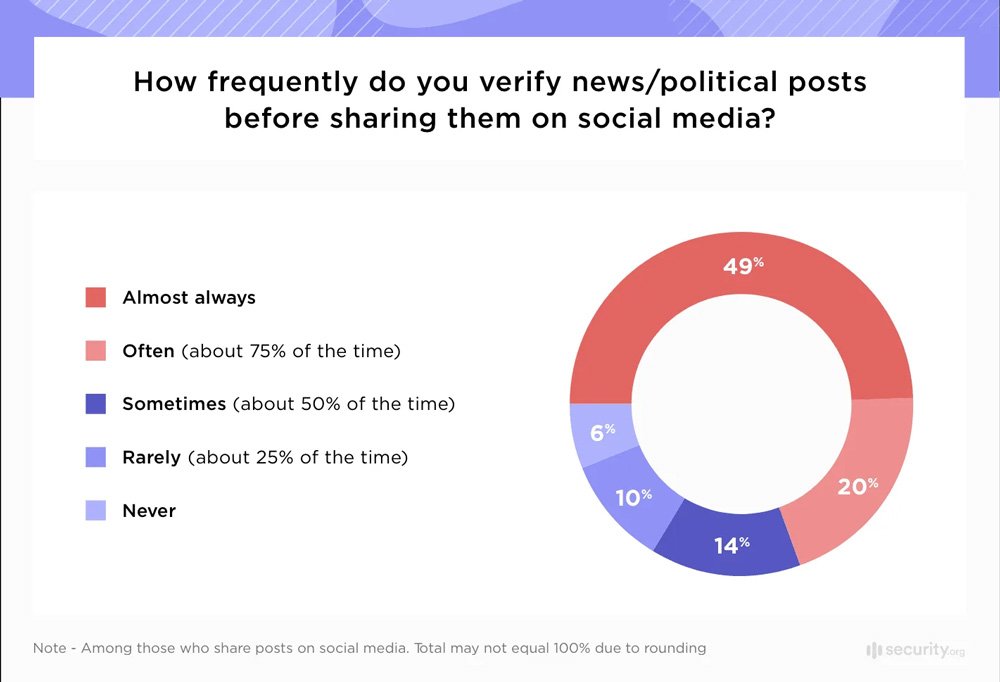

How Often People Verify News or Political Posts Before Sharing (Social Media Behavior Insight)

- 49% of users report that they almost always verify news or political posts before sharing, representing the largest group and signaling that nearly half claim to engage in consistent fact-checking.

- 20% verify content often, checking facts about 75% of the time, showing that these users frequently confirm information, but not as regularly as the leading group.

- 14% verify only sometimes, meaning around 50% of the time, reflecting a middle-ground pattern of occasional but inconsistent verification.

- 10% of respondents state they verify rarely, or roughly 25% of the time, indicating a less cautious group that may contribute more to unverified sharing.

- 6% admit they never verify news or political posts before sharing, forming a small segment that increases the risk of spreading misinformation online.
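Because each verification category above maps to an approximate frequency, the shares can be combined into a single self-reported average. The Python sketch below is an illustrative calculation only, not a figure reported by the survey: it assumes "almost always" means roughly 100% of the time and "never" means 0%, and it renormalises the shares because the published percentages add up to 99%, presumably due to rounding. The dictionary name `verification_groups` is a hypothetical label.

```python
# Survey shares from the list above, paired with the approximate fraction of
# posts each group says it verifies. ASSUMPTION: "almost always" is treated as
# ~100% and "never" as 0%; the survey gives no exact figure for these two.
verification_groups = {
    "almost always": (0.49, 1.00),
    "often": (0.20, 0.75),
    "sometimes": (0.14, 0.50),
    "rarely": (0.10, 0.25),
    "never": (0.06, 0.00),
}

total_share = sum(share for share, _ in verification_groups.values())

# Weighted average verification frequency across respondents. Dividing by
# total_share renormalises the rounded shares, which sum to 99% instead of 100%.
implied_rate = (
    sum(share * rate for share, rate in verification_groups.values()) / total_share
)

print(f"Shares sum to {total_share:.0%}; implied average verification rate is about {implied_rate:.0%}")
```

Under these assumptions the implied average is roughly 74%. Since the inputs are self-reports, this reflects what respondents say about their habits, not observed sharing behavior.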

Health-Related Misinformation on Social Media

- Research confirms that health misinformation is widespread and harms public health behavior.

- Misinformation undermines trust in experts and increases risk behavior.

- Health misinformation is 70% more likely to be shared than factual information.

- Over half of popular mental-health videos on TikTok contain misleading advice.

- Influencer-promoted medical tests were overwhelmingly promotional in tone, with harms rarely mentioned.

- Wellness influencers significantly contribute to misleading health claims.

- Fact-checking alone struggles to contain the volume of false health content.

- During crises, misinformation reduces preventative behavior and delays treatment.

- With global social media usage exceeding 5.24 billion users, misinformation can reach a very broad audience.

Climate Change Misinformation and Denialism

- 43% of respondents in India saw false or misleading climate change information online in one week.

- Climate denial claims attacking solutions represent 70% of climate denial narratives on YouTube.

- There was a 267% increase in climate-related disinformation ahead of a recent global summit.

- 12% of misinformation is attributed to politicians and parties, with the government responsible for 11%.

- Climate misinformation was ranked as the second-biggest global threat in 2024, after extreme weather.

- Social media algorithms amplify sensational and controversial climate misinformation content.

- Conservative media outlets hold the most influence in spreading climate denial disinformation.

- Around 74% of people trust scientists for climate information despite widespread misinformation.

- Nearly half (46%) of people mistakenly believe scientists disagree on climate change.

- Online misinformation slows public action by eroding support for climate solutions and undermining policy communication.

Misinformation During Major Events (e.g., Riots and Emergencies)

- During crises, misinformation spikes sharply, complicating emergency response.

- Engagement with misinformation is higher in crisis contexts compared with normal periods.

- Roughly 20% of crisis-event chatter may originate from bots.

- Younger users dependent on social media face greater exposure during crises.

- Flagging reduces engagement but often arrives after initial viral spread.

- False crisis narratives circulate heavily via private messaging groups.

- Crisis misinformation can reduce trust in institutions and increase harmful behavior.

- Emergency responders struggle to intervene quickly enough to counter viral falsehoods.

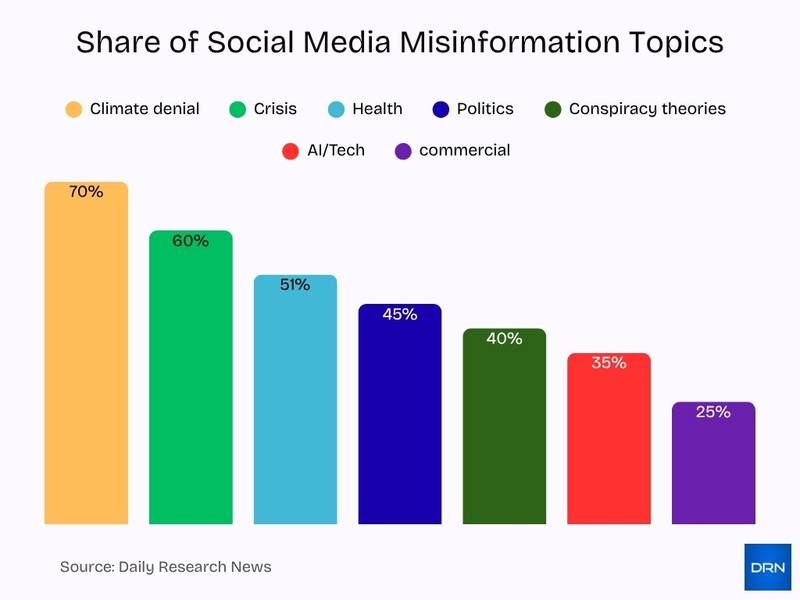

Most Common Topics of Social Media Misinformation

- 45% of social media misinformation relates to election and political topics.

- Health misinformation accounts for up to 51% of vaccine-related posts on social platforms.

- Climate denial misinformation comprises about 70% of climate-related false claims on YouTube.

- During crises, event-based misinformation spikes by more than 60% on social media.

- AI and tech misinformation has grown by 35% year-over-year across major platforms.

- Influencer-driven commercial misinformation rose by 25% in the last two years.

- Conspiracy theories consistently account for roughly 40% of all misinformation topics.

- Political, health, and science topics attract misinformation rates up to 50% higher than average.

- Survey data show that 66% of users report exposure to misinformation sometimes or often.

- Misinformation on social media spreads with engagement rates 11-15% higher than factual content.

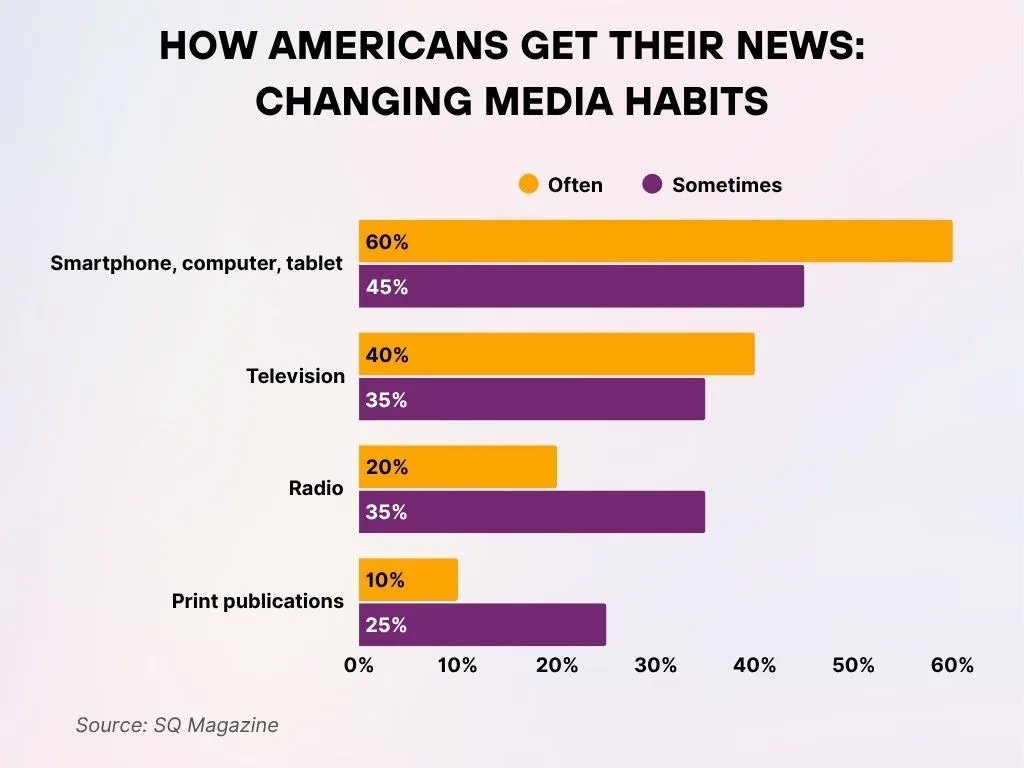

How Americans Get Their News: Changing Media Habits

- 60% of Americans regularly obtain their news through a smartphone, computer, or tablet.

- 45% report that they occasionally rely on smartphones or other digital devices for news consumption.

- 40% of respondents frequently watch television for news, with TV continuing as a solid traditional source despite trailing digital platforms.

- 35% of people intermittently depend on television as their news source.

- 20% of Americans routinely listen to the radio for their news updates.

- 35% indicate they periodically turn to the radio to access their news.

- 10% of respondents consistently read print publications to stay informed.

- 25% say they, at times, read newspapers or magazines for news.

Deepfakes and Digitally Altered Content

- Deepfake files could reach 8 million in 2025, up from 500,000 in 2023.

- 179 deepfake incidents were reported in Q1 2025 alone, already exceeding the total for all of 2024 by a double-digit percentage.

- Human deepfake detection accuracy averages just 24.5%.

- Deepfake-enabled fraud rose 3,000% in recent years.

- AI-generated misinformation is significantly more viral than conventional content.

- Synthetic media can bypass the critical scrutiny people typically apply to content.

- Deepfakes now account for 6.5% of all fraud attacks.

- Generative-AI market growth suggests misinformation risks will grow accordingly.
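The deepfake-file projection at the top of this list implies very steep growth. As a rough illustration that is not part of the cited projection, the Python sketch below converts the two figures (about 500,000 files in 2023 and about 8 million projected for 2025) into a fold change and an implied compound annual growth rate, assuming the growth is compounded evenly over the two years. The helper `annual_growth_rate` is a hypothetical name introduced for this example.

```python
def annual_growth_rate(start: float, end: float, years: float) -> float:
    """Hypothetical helper: compound annual growth rate from `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# Figures from the list above: ~500,000 deepfake files in 2023, ~8 million projected for 2025.
files_2023 = 500_000
files_2025_projected = 8_000_000

fold_change = files_2025_projected / files_2023  # 16.0, i.e. a 16-fold increase
# ASSUMPTION: growth is spread evenly (compounded) over the two years 2023 to 2025.
cagr = annual_growth_rate(files_2023, files_2025_projected, years=2)

print(f"{fold_change:.0f}x overall, about {cagr:.0%} growth per year")
# prints "16x overall, about 300% growth per year"
```

A 16-fold rise over two years corresponds to roughly quadrupling each year, which is why annualising the figure is a useful sanity check on how aggressive the projection is.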

Role of Artificial Intelligence in Spreading Misinformation

- AI-generated posts are 3 times more likely to go viral than non-AI content on social media platforms.

- Over 50% of long-form LinkedIn posts are likely created using AI tools as of 2024.

- AI-driven misinformation rose by 146% on Reddit from 2021 to 2024.

- Exposure to realistic AI-created images increases belief in false headlines by approximately 30%.

- Fraud losses enabled by generative AI are projected to reach $40 billion in the U.S. by 2027.

- Around 25% of Twitter activity comes from bot-driven accounts, many of which spread AI-generated misinformation.

- Users can only distinguish AI-generated text from human-written content about 53% of the time in tests.

- AI reduces the cost of creating misinformation, enabling the production of thousands of false posts daily.

- About 80% of fact-checked misinformation claims involve media such as AI-altered images or videos.

- Algorithms amplify AI-generated misinformation, with 34% of users reached by AI-enhanced propaganda campaigns globally.

Bot and Automated Account Influence on Misinformation Spread

- Roughly 20% of crisis-related online chatter comes from bots.

- Bots use simplistic language cues to amplify content.

- Around 14.8% of accounts in a major dataset were identified as likely bots.

- Bots manipulate sentiment, amplify echo chambers, and distort discourse.

- Automated accounts may be responsible for 25% or more of misinformation interactions.

- Bots create artificial consensus through mass posting.

- Bot activity shapes initial user exposure and platform trending signals.

- Platforms often fail to detect coordinated bot networks at scale.

- Bots are increasingly viewed as a vector for election-related misinformation.

Consequences of Misinformation for Public Trust and Behavior

- 72% of global internet users report monthly encounters with misinformation.

- 45% of U.S. adults say they struggle to determine what is true or false online.

- Repeated exposure reduces trust in media and institutions.

- Health misinformation reduces vaccination uptake and delays medical treatment.

- 70% of Americans report reduced confidence in government due to fake news.

- Businesses face reputation and investment risks due to misinformation.

- False content increases anxiety and reduces perceived control.

- Repeated sharing of falsehoods decreases engagement with credible sources.

- People become passive when they feel overwhelmed by false information.

Effectiveness of Fact-Checking and Moderation Efforts

- Community-based fact-checking can reduce misinformation spread by 62%.

- Fact-checks with source links are 2.33 times more likely to be considered helpful.

- Fact-checking reduces false beliefs, though effects on sharing are smaller; sharing decreases by 17% with warning labels.

- About 32 countries introduced or amended misinformation laws by 2025.

- The Digital Services Act establishes strict content-moderation rules affecting platforms in the EU.

- Only 19% of global users have completed media-literacy training.

- Fact-checking labels help, but often arrive too late to prevent misinformation from spreading.

- The effectiveness of moderation varies widely across platforms, with some reducing false content by 27% through warning labels.

- Policymakers are debating moderation bias, with concerns over fairness and the sustainability of fact-checking programs.

- Studies of social media moderation show that belief in false headlines can drop by 13% when warning labels are applied, even among distrustful users.

User Actions and Responses to Questionable Content

- 49% of users almost always verify news or political posts before sharing.

- 20% verify content often.

- 14% verify content only sometimes.

- 38% of U.S. adults admit to unknowingly sharing fake news previously.

- Younger users encounter misinformation more but verify less.

- Media-literacy training improves verification habits.

- 65% of U.S. parents now monitor children’s social-media use due to misinformation concerns.

- 57% of users believe platforms prioritize engagement over accuracy.

- Only 1 in 10 misinformation posts are flagged by users.

Regulatory and Policy Perspectives on Misinformation

- The DSA requires very large platforms to publish transparency reports, with only 116 platforms registered by January 2025.

- In six months, these platforms submitted over 9.4 billion statements of reasons related to content-moderation decisions.

- The U.S. Digital Integrity Act proposes mandatory independent audits of algorithms used by large digital platforms.

- Between 2010 and 2022, 80 countries enacted or updated misinformation laws, including 32 countries recently for platform governance.

- Over 4.22 crore (about 42.2 million) rural citizens in India received digital literacy certifications by 2022, highlighting a policy focus on literacy.

- Regulations on bot labeling and AI accountability are advancing, with calls to detect and label bot accounts proactively.

- Industry self-regulation for misinformation remains inconsistent, often dependent on credible threats of government regulation.

- Cross-border misinformation flows drive the need for global cooperation, with multilateral bodies pushing unified frameworks.

- Some governments plan mandatory fact-checking disclosures and transparency obligations for AI-generated content.

- Experts note less policy emphasis on user behavior compared to content production in combating misinformation.

Frequently Asked Questions (FAQs)

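What share of U.S. adults regularly get news from Facebook?
Approximately 38% of U.S. adults say they regularly get news from Facebook.

What share of U.S. adults regularly get news from YouTube?
Roughly 35% of U.S. adults report regularly getting news from YouTube.

How many Eurobarometer respondents believed they had been exposed to disinformation?
In the Eurobarometer survey, about 66% of respondents believed they had been exposed to disinformation at least sometimes in the prior week.

How common is misinformation in mental-health videos on TikTok?
More than 50% of the top 100 TikTok videos under the #mentalhealthtips hashtag contained some form of misinformation.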

Conclusion

In summary, misinformation on social media remains a multifaceted challenge with global reach, technological sophistication, and significant real-world consequences. From the rising role of AI in creating and amplifying false content, to automated accounts shaping public discourse, to eroding trust in institutions and platforms, each facet deepens the complexity. Fact-checking and moderation efforts show promise, but they often arrive late or cover only part of the problem, and user responses remain inconsistent.

Regulatory and policy frameworks are advancing, yet no single solution exists. As you engage with social platforms in news, health, politics, or the environment, being aware, verifying, and critically assessing content matters more than ever.